Interpretable Personal Loan Default Predictions

Increasingly, stakeholders, customers, and regulators of financial institutions are demanding that decisions about financial products such as personal loans not be left entirely to machine learning. The effects of bias and unknowns are unacceptable when they can unfairly affect the livelihood of real people. Training interpretable models is therefore paramount.

With interpretable models, a loan officer ultimately decides whether to approve a loan after looking at the model’s predictions, knowing the precise reasons for the model’s approval or rejection of a customer. Interpretability supports that decision by:

- Auditing for bias, legal issues and ethical violations: the loan officer can notify the bank when any of these occur

- Identifying missing context: the loan officer can help improve the model by recommending missing features

- Confirming generalization of the model: if its predictions agree with the loan officer’s holistic view of the loan, the model is probably generalizing well

- Reverse engineering the decision: some of the parameters used to make a loan default prediction can be modified at the loan officer’s discretion. Tweaking a value in the loan application can turn a “no” into a “yes”, so being able to reverse engineer a choice may reveal whether the model’s prediction was a true positive or a false positive.
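
The last point can be illustrated with a minimal sketch of a one-feature counterfactual search. Everything in it is an assumption made for illustration: the logistic scoring function, its weights, the feature names, and the 0.5 threshold are hypothetical stand-ins, not the models trained in this project.

```python
import math

# Hypothetical logistic scoring model. The weights and feature names are
# illustrative assumptions, not the project's trained model.
WEIGHTS = {"loan_amount_k": 0.08, "debt_to_income": 4.0, "annual_income_k": -0.03}
BIAS = -1.0
THRESHOLD = 0.5  # a default probability at or above this is a "no"


def default_probability(application):
    """Return the predicted probability of default for one application."""
    z = BIAS + sum(WEIGHTS[name] * application[name] for name in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))


def smallest_flip(application, feature, step, max_steps=100):
    """Move one feature in fixed steps until the decision flips to approve.

    Returns the first value of `feature` that brings the default probability
    below THRESHOLD, or None if no such value is found within max_steps.
    """
    candidate = dict(application)
    for _ in range(max_steps):
        if default_probability(candidate) < THRESHOLD:
            return candidate[feature]
        candidate[feature] += step
    return None


application = {"loan_amount_k": 30.0, "debt_to_income": 0.35, "annual_income_k": 60.0}
rejected = default_probability(application) >= THRESHOLD

# Lower the requested amount by 1k at a time until the "no" becomes a "yes".
approved_amount = smallest_flip(application, "loan_amount_k", step=-1.0)
```

With these made-up numbers the application is rejected as submitted, and the search reports the largest requested amount (in 1k steps) at which the toy model would approve it. A loan officer could use the same idea to judge whether a rejection hinges on something negotiable, such as the loan amount.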

To carry out this project, I:

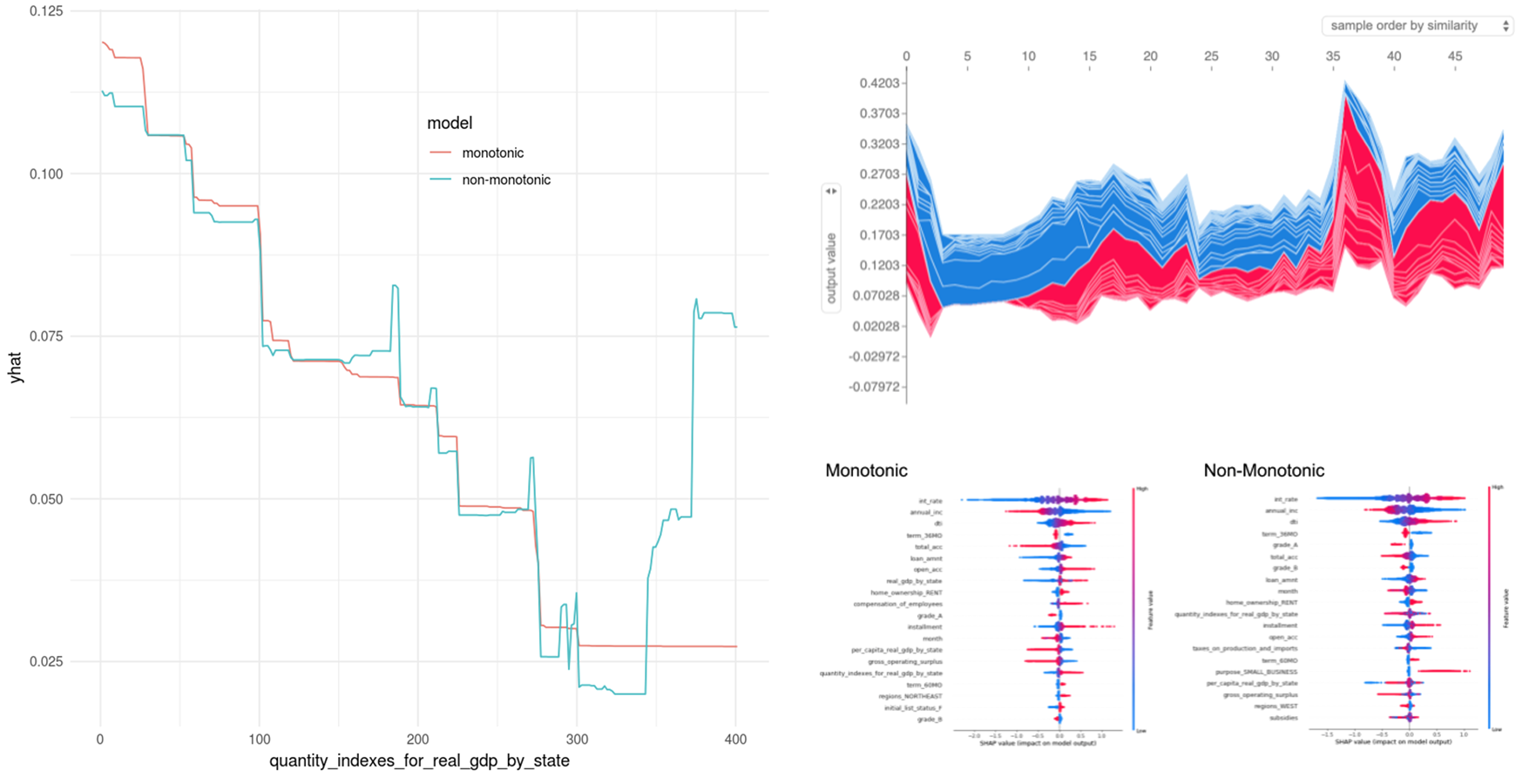

• Implemented two high-performing interpretable models, using cost-sensitive learning methods, Bayesian hyperparameter tuning, and cross-validation to train on a large, imbalanced dataset of loans and predict defaults while increasing profit lift. One was a monotonic XGBoost model and the other an eXplainable Neural Network (XNN) with additive index models.

• Explained models on both a global and local level with SHapley Additive exPlanations (SHAP), local interpretable model-agnostic explanations (LIME) and leave-one-covariate-out (LOCO) methods.
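
To give a feel for what a local explanation looks like, here is a minimal sketch that computes exact Shapley values for a single applicant by enumerating every feature coalition. It is not the SHAP library and not the project's code: the `risk_score` function, its coefficients, the feature names, and the baseline applicant are all hypothetical, and features outside a coalition are simply set to their baseline value.

```python
from itertools import combinations
from math import factorial


def risk_score(x):
    """Hypothetical toy model: linear terms plus one interaction term."""
    return (
        0.04 * x["loan_amount_k"]
        + 2.0 * x["debt_to_income"]
        - 0.01 * x["annual_income_k"]
        + 0.05 * x["loan_amount_k"] * x["debt_to_income"]
    )


def shapley_values(model, instance, baseline):
    """Exact Shapley values of `instance` relative to `baseline`.

    Features outside a coalition take their baseline value. The cost is
    exponential in the number of features, so this only suits tiny examples.
    """
    features = list(instance)
    n = len(features)

    def value(coalition):
        mixed = {f: (instance[f] if f in coalition else baseline[f]) for f in features}
        return model(mixed)

    phi = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude f.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                without_f = set(subset)
                total += weight * (value(without_f | {f}) - value(without_f))
        phi[f] = total
    return phi


applicant = {"loan_amount_k": 30.0, "debt_to_income": 0.35, "annual_income_k": 60.0}
baseline = {"loan_amount_k": 15.0, "debt_to_income": 0.20, "annual_income_k": 75.0}

phi = shapley_values(risk_score, applicant, baseline)
for name, contribution in phi.items():
    print(f"{name}: {contribution:+.4f}")
```

The contributions sum to the difference between the applicant's score and the baseline score, which is the property that makes this kind of explanation easy to read: each feature gets a signed share of the prediction. Exact enumeration does not scale beyond a handful of features, which is one reason dedicated libraries rely on faster, model-specific or approximate algorithms.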

Summary

Machine learning algorithms increasingly decide whether a customer is approved or denied for a personal loan. However, a loan officer may not know why a customer was denied. This is a serious problem when a customer may have been unfairly denied because of biases built into the algorithms. I therefore set out to make personal loan default predictions interpretable.