3D Scanning Via Photogrammetry with Automated Stage

3D printing is now common enough that many people see it as a way to make objects they want. Usually, though, that means either finding an existing design online, which may cost money, or designing the object oneself, which the vast majority of people do not know how to do. What if you could take a physical object you already own, make a digital 3D version of it, and then 3D print that?

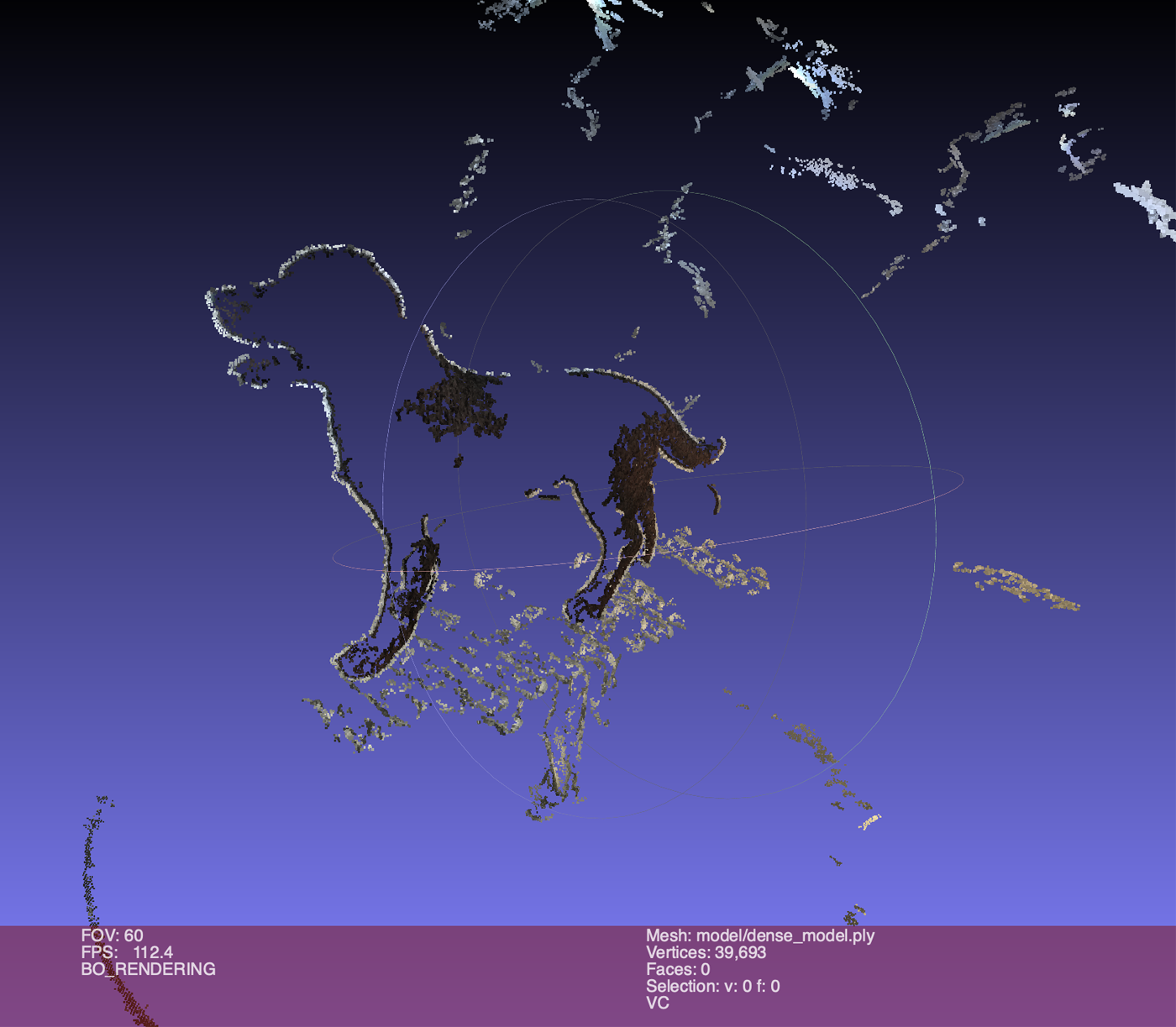

During the annual Formlabs hackathon, I designed a cheap 3D scanner that, in principle, anyone with basic hardware and software skills could build. I wrote a program that rotated a physical object and changed its height while a camera photographed it from different angles. Applying stereo matching, epipolar geometry, and disparity-based depth estimation (photogrammetry) to those photographs, the machine produced a point cloud, which could then be used to derive a 3D model.
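The depth step described above can be illustrated with a small, dependency-free sketch. It is not the project's code: it assumes two photos that have already been rectified (for example with OpenCV's `cv2.stereoRectify`), so each match lies on the same row (the epipolar line), and it uses hypothetical helper names (`sad`, `match_row`, `triangulate`) and made-up camera values. Matching picks the disparity d that minimizes the sum of absolute differences (SAD) over a small window, and depth follows from the pinhole relation Z = f·B/d, where f is the focal length in pixels and B is the baseline between the two views.

```python
# Minimal sketch of stereo matching on one rectified scanline pair, followed by
# triangulation of each disparity into a 3D point.
# Assumptions (not from the original project): the two photos are already
# rectified, so a point's match lies on the same row (its epipolar line), and
# all numeric values (focal length, baseline, pixel rows) are illustrative.


def sad(left, right, x_left, x_right, half):
    """Sum of absolute differences between two windows on the same row."""
    return sum(
        abs(left[x_left + k] - right[x_right + k])
        for k in range(-half, half + 1)
    )


def match_row(left, right, max_disp, half=1):
    """Block matching: return {x: disparity} for each left pixel with a full window."""
    disparities = {}
    for x in range(half, len(left) - half):
        best_d, best_cost = None, None
        # A point at column x in the left image appears at column x - d in the right.
        for d in range(max_disp + 1):
            x_right = x - d
            if x_right - half < 0:
                break  # window would run off the left edge of the right image
            cost = sad(left, right, x, x_right, half)
            if best_cost is None or cost < best_cost:
                best_d, best_cost = d, cost
        if best_d is not None:
            disparities[x] = best_d
    return disparities


def triangulate(x, y, d, f, baseline, cx, cy):
    """Pinhole triangulation: Z = f * B / d, then back-project X and Y."""
    if d <= 0:
        return None  # zero disparity: the point is effectively at infinity
    z = f * baseline / d
    return ((x - cx) * z / f, (y - cy) * z / f, z)


# Toy example: the right scanline is the left one shifted two pixels to the left.
left = [0, 0, 0, 0, 10, 50, 90, 50, 10, 0, 0, 0]
right = left[2:] + [0, 0]

disparities = match_row(left, right, max_disp=3)
point_cloud = [
    triangulate(x, 0, d, f=800.0, baseline=60.0, cx=6.0, cy=0.0)
    for x, d in disparities.items()
    if d > 0
]
print(disparities)
print(point_cloud)
```

On full images, OpenCV's `cv2.StereoSGBM_create` and `cv2.reprojectImageTo3D` cover these two steps. The toy run also exposes a known weakness of block matching: in flat, textureless parts of the row the cost is ambiguous, so the disparities found near the edges of this scanline are unreliable. OpenCV's matchers expose a `uniquenessRatio` parameter for rejecting such ambiguous matches.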

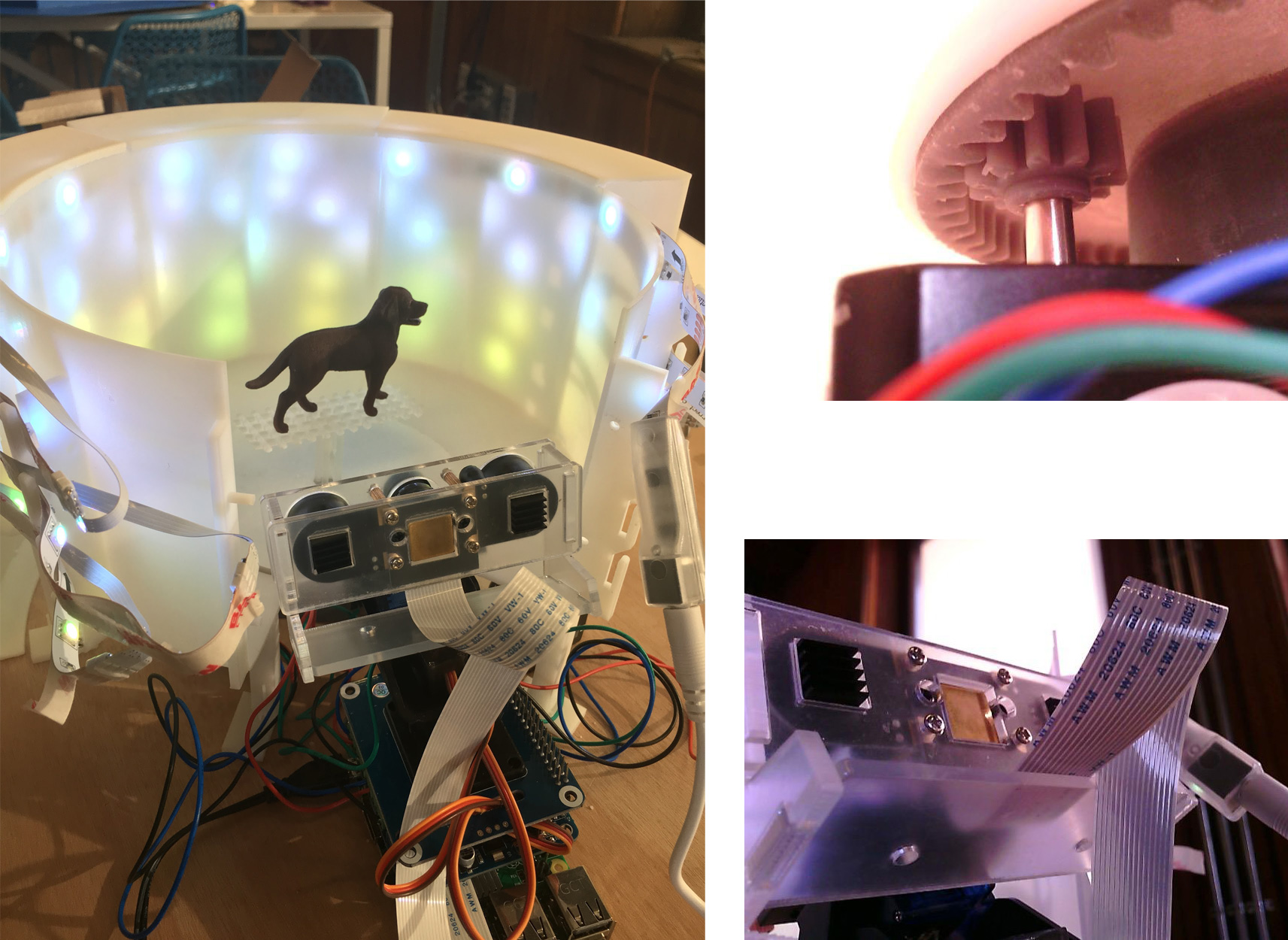

• Hardware: A Raspberry Pi connected to a motor controller drives several motors that position the object relative to an autofocus, high-resolution camera, with LEDs illuminating the scene. The object sits in a 3D printed lightbox on a rotating, automated stage. The lightbox provides strong yet even lighting against a solid matte background color that makes the object easy to mask (minimal shadows, no over-saturation).

• Software: Python running on Raspbian OS, using OpenCV for the computer vision calculations and additional libraries to control the motors.
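The masking role of the lightbox's solid background can be sketched as a simple color key: pixels close to the known background color are treated as background, and everything else as the object. This is an illustration under assumed values, not the project's code; the background color, the threshold of 40, and the helper names `color_distance` and `foreground_mask` are hypothetical. With OpenCV, `cv2.inRange` on an HSV image is a common way to do the same thing on full frames.

```python
# Minimal sketch of color-key masking against a solid, evenly lit background.
# Assumptions (not from the original project): the background color, the
# threshold, and the tiny image below are illustrative; an image is a list of
# rows of (R, G, B) tuples.

import math


def color_distance(a, b):
    """Euclidean distance between two RGB triples."""
    return math.dist(a, b)


def foreground_mask(image, backdrop, threshold):
    """Return 1 for pixels far from the background color (the object), else 0."""
    return [
        [1 if color_distance(px, backdrop) > threshold else 0 for px in row]
        for row in image
    ]


backdrop = (40, 200, 60)  # hypothetical matte green background
image = [
    [(42, 198, 61), (45, 205, 58), (38, 197, 63)],
    [(41, 201, 59), (180, 120, 90), (39, 199, 62)],
    [(44, 196, 60), (170, 110, 80), (160, 100, 70)],
]

mask = foreground_mask(image, backdrop, threshold=40)
for row in mask:
    print(row)
```

Even lighting is what makes a single threshold workable: a background pixel darkened by a shadow, such as (20, 100, 30), lies far from (40, 200, 60) and would be wrongly kept as part of the object.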

Summary

Formlabs holds a hackathon every year. Given my interest in computer vision and its clear connection to 3D printing, I designed and built a cheap 3D scanner that creates a digital 3D mesh from a real-life object.