Audio-Assisted Lip-Reading System Using LSTM Artificial Neural Network

The deaf and hearing impaired (10 million people in the US) have a difficult time immersing in live speaking events in real-time. Although this population is 4 times the size of those in wheelchairs, American Sign Language (ASL) or in some cases just written notes are required as accommodations. However, only a fraction of the functionally deaf—less than 500 thousand—speak ASL and notes unfairly prevent any rich engagement with the event. And this is for events that even provide simultaneous ASL interpreters or written notes. What about stand-up comedy shows? Meetups? Debates? Conferences?

To address this issue, myself and a colleague named Srirakshith Betageri, created an audio-assisted lip-reading system that would allow the deaf and hard-of-hearing to enjoy and engage in live events that could be implemented in a straightforward and iterative way. Specifically, we:

• Prepared a dataset of over 1,000 hours of labeled video footage locating the mouth in each frame, cropping it and stitching it together as an audio-less video while extracting mel-frequency cepstral coefficients from audio (these are a set of 10-20 numbers that capture a frequency envelope in a condensed form, allowing the analysis of audio data much more easily).

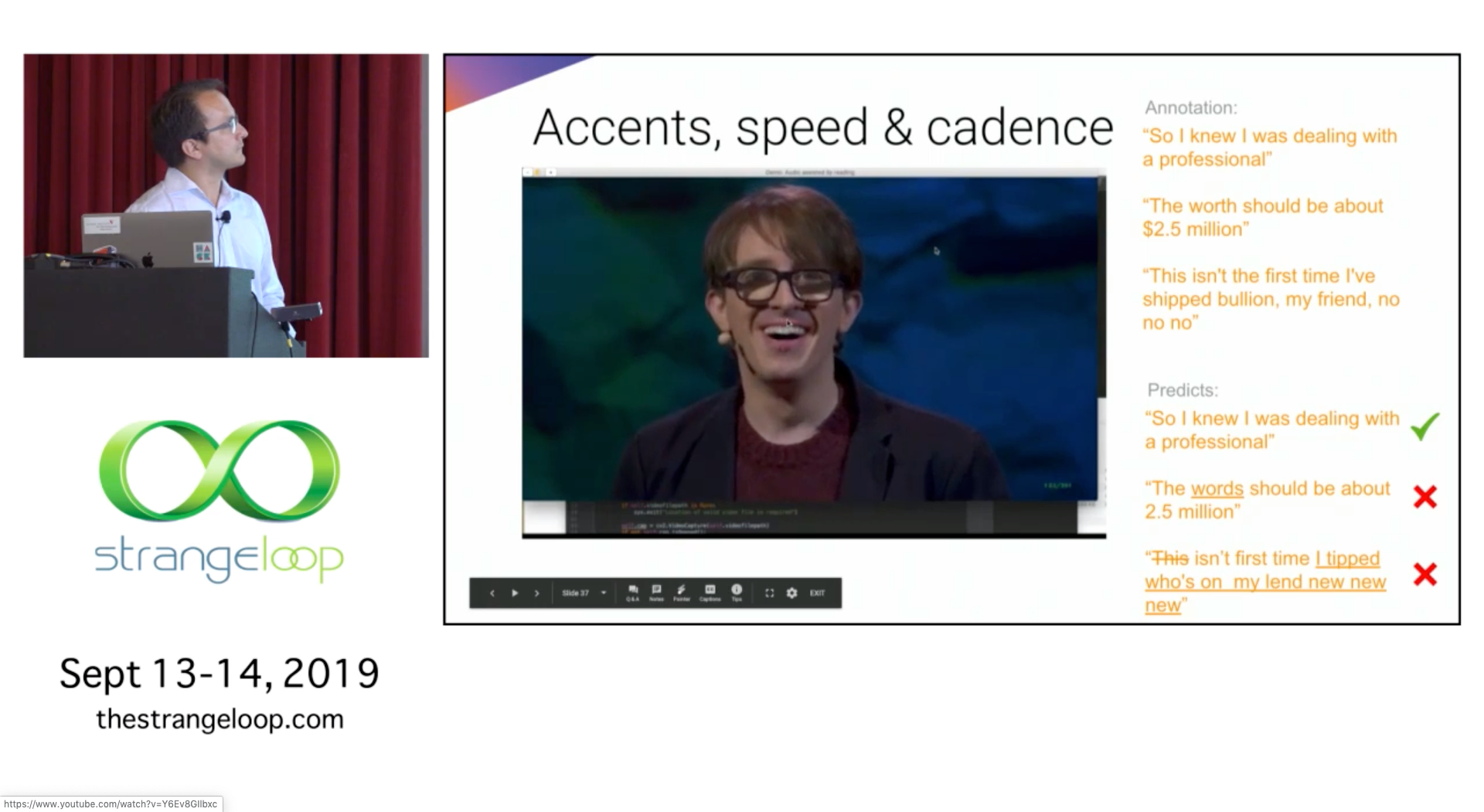

• Trained a recurrent neural network to turn separate video/audio streams to English language transcriptions of what was said using a dataset of 4,000 hours of labeled video footage.

• Produced a python program to use the trained model to transcribe a live video stream as well as a recorded one for testing, either independently or in batch.

• Composed a commercial plan and pitch for this project which won second prize in the entrepreneurial competition Pitch@IIT 2019

• Presented this assistive augmentation project at Strange Loop 2019 and several Chicago MeetUps.

Summary

The deaf and hard-of-hearing have considerable difficulty appreciating most live events because of a lack of accommodations and limitations to existing assistive technologies. To address this issue I created an audio-assisted lip-reading system using a special type of artificial neural network and presented the work at the Strange Loop 2019 conference as a speaker.